The role of software in the automotive industry is evolving rapidly, and container technology is a key enabler for the Software-Defined Vehicle (SDV). Existing container orchestration tools are designed for environments like private or public clouds. Safety-critical systems such as cars, however, require stringent operational patterns that impose different requirements on the tooling.

Addressing the tooling requirements for SDVs calls for workload orchestration with extremely limited overhead. This is what the Eclipse BlueChi project was created to provide: a multi-node service controller for deterministic systems with limited resources. It is written in C and integrates seamlessly with systemd through systemd's D-Bus API. For multi-node support, it relays D-Bus messages over TCP.

This integration lets BlueChi leverage the years of development and bug fixes that have gone into systemd since its inception in 2010. It also avoids reinventing the wheel for non-trivial tasks such as handling service dependencies and optimizing service startup. In addition, BlueChi can run anything that systemd supports, such as services, timers, and slices, and applications can run on bare metal or in a container.

BlueChi's Architecture: Controller, Agent, CLI Tool

BlueChi is meant to be used together with an external state manager that knows the desired state of the system(s). BlueChi only knows how to transition between states, not what the desired final state of the system is. It does not act on its own when the state of services or node connections changes. Instead, it assumes that the initial setup has brought the system into the desired state, and it manages transitions from there.
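
To make this division of labor concrete, here is a minimal sketch of the decision an external state manager might make before calling BlueChi. It assumes a hypothetical representation of desired and current state as nested dictionaries: node name, then unit name, then "active" or "inactive". `plan_transitions` is an illustrative helper and not part of BlueChi. Its output is the list of start and stop operations that BlueChi would then be asked to execute.

```python
from typing import Dict, List, Tuple

# Hypothetical state model: node name -> unit name -> "active" | "inactive"
State = Dict[str, Dict[str, str]]


def plan_transitions(desired: State, current: State) -> List[Tuple[str, str, str]]:
    """Return (operation, node, unit) tuples that move `current` toward `desired`.

    Only units listed in `desired` are considered; anything else is left alone.
    """
    actions: List[Tuple[str, str, str]] = []
    for node in sorted(desired):
        for unit in sorted(desired[node]):
            wanted = desired[node][unit]
            # Units the manager has no information about are treated as inactive.
            actual = current.get(node, {}).get(unit, "inactive")
            if wanted == "active" and actual != "active":
                actions.append(("start", node, unit))
            elif wanted == "inactive" and actual == "active":
                actions.append(("stop", node, unit))
    return actions


if __name__ == "__main__":
    desired = {"foo": {"producer.service": "active"}, "bar": {"mqtt.service": "active"}}
    current = {"foo": {"producer.service": "inactive"}, "bar": {"mqtt.service": "active"}}
    for operation, node, unit in plan_transitions(desired, current):
        # A real state manager would issue these through BlueChi's D-Bus API.
        print(operation, node, unit)
```

Units that do not appear in the desired state are deliberately left untouched. Because BlueChi does not act on state changes by itself, a real state manager would repeat such a check whenever it learns that a service or node has changed.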

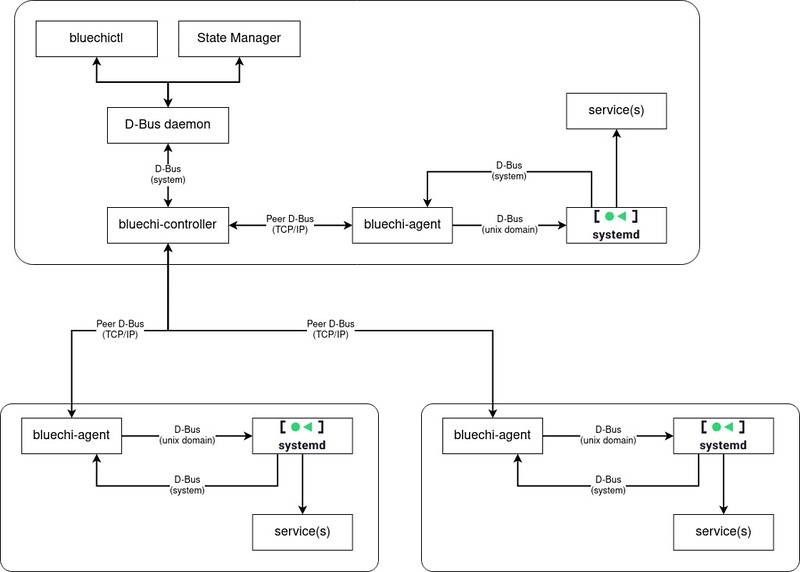

To accomplish this, BlueChi is built around three components (Figure 1):

- bluechi-controller, which runs on the primary node and controls connected agents

- bluechi-agent, which runs on each managed node, relaying commands from the controller to the local systemd

- bluechictl, which is a CLI tool meant to be used by administrators to test, debug, or manually manage services across nodes

The bluechi-controller and bluechi-agent can also run alongside each other on the same system (also called a node).

The controller service runs on the primary node. After starting up and loading its configuration files, it waits for incoming connection requests from agents. Each node has a unique name that the controller uses to reference it.

The agent service runs on each managed node. When the agent starts, it also loads its configuration, connects to the controller via D-Bus over TCP/IP, and registers itself as available, optionally authenticating. From then on, the agent receives commands from the controller and reports local state changes back to it.
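
The following sketch shows the kind of configuration each side loads. It renders a minimal controller configuration and a minimal agent configuration with Python's standard `configparser`. The section and option names (`bluechi-controller`, `bluechi-agent`, `ControllerPort`, `AllowedNodeNames`, `NodeName`, `ControllerHost`) reflect our reading of the BlueChi configuration documentation and are assumptions to check against the version you install. The node names, address, and port are placeholders.

```python
import configparser
import io


def render_ini(section: str, options: dict) -> str:
    """Render a single INI section, keeping key case and omitting spaces around '='."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # configparser lowercases keys by default
    parser[section] = options
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)
    return buf.getvalue()


def controller_conf(port: int, allowed_node_names: list) -> str:
    # Assumed option names; verify against your BlueChi version.
    return render_ini("bluechi-controller", {
        "ControllerPort": str(port),
        "AllowedNodeNames": ",".join(allowed_node_names),
    })


def agent_conf(node_name: str, controller_host: str, port: int) -> str:
    # Assumed option names; NodeName is the unique name the controller uses for this node.
    return render_ini("bluechi-agent", {
        "NodeName": node_name,
        "ControllerHost": controller_host,
        "ControllerPort": str(port),
    })


if __name__ == "__main__":
    print(controller_conf(842, ["foo", "bar"]))
    print(agent_conf("foo", "192.0.2.10", 842))
```

Setting `optionxform = str` matters here: without it, `configparser` would write `controllerport` instead of `ControllerPort`.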

The image below depicts an overview of BlueChi’s architecture with the previously described components:

Using BlueChi Requires Custom Applications

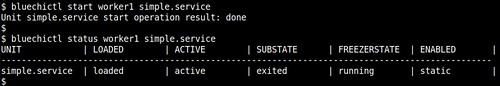

If you are interested in using BlueChi, the Getting Started guide provides instructions for setting up a single-node system and a multi-node system. Once set up, the system can be controlled via bluechictl.

Because bluechictl is intended for managing services manually, more sophisticated use cases, such as a state manager, require custom applications.

BlueChi provides its public API on the local system bus so that other applications can interact with it. For example, the following Python snippet retrieves a list of all nodes that are expected to be managed by BlueChi and shows their name and connection status:

```python
#!/usr/bin/python3

import dasbus.connection

bus = dasbus.connection.SystemMessageBus()

# Proxy for BlueChi's controller object on the local system bus
manager = bus.get_proxy("org.eclipse.bluechi", "/org/eclipse/bluechi")

nodes = manager.ListNodes()

for node in nodes:
    # node is a tuple consisting of (name, object_path, status)
    print(f"Node: {node[0]}, State: {node[2]}")
```

The BlueChi documentation contains more examples covering information retrieval, unit operations such as start and stop, and monitoring the status of services. The examples are written in Go, Python, and Rust and use plain D-Bus libraries. You therefore need to read BlueChi's introspection data to know which operations are available. Generating clients from this data makes writing custom applications more convenient.
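
Building on the listing snippet, here is a sketch of a unit operation: starting a unit on a specific node. It assumes the controller exposes `GetNode(name)`, which returns the node's object path, and that the node object offers `StartUnit(name, mode)`, which returns a job object path. Confirm these names against the introspection data before relying on them, for example with `busctl introspect org.eclipse.bluechi /org/eclipse/bluechi`. The `NODE:UNIT` argument format parsed by `split_target` is made up for this example.

```python
#!/usr/bin/python3
from typing import Tuple

BLUECHI_BUS_NAME = "org.eclipse.bluechi"
CONTROLLER_PATH = "/org/eclipse/bluechi"


def split_target(target: str) -> Tuple[str, str]:
    """Split a hypothetical 'NODE:UNIT' argument into (node, unit)."""
    node, sep, unit = target.partition(":")
    if not sep or not node or not unit:
        raise ValueError(f"expected NODE:UNIT, got {target!r}")
    return node, unit


def start_unit(node_name: str, unit: str, mode: str = "replace") -> str:
    """Ask BlueChi to start `unit` on `node_name` and return the job object path.

    Assumes Controller.GetNode(name) -> object path and
    Node.StartUnit(name, mode) -> job object path; verify via introspection.
    """
    # Imported lazily so split_target() works without dasbus installed.
    import dasbus.connection

    bus = dasbus.connection.SystemMessageBus()
    controller = bus.get_proxy(BLUECHI_BUS_NAME, CONTROLLER_PATH)
    node_path = controller.GetNode(node_name)
    node = bus.get_proxy(BLUECHI_BUS_NAME, node_path)
    # "replace" is one of systemd's job modes
    return node.StartUnit(unit, mode)
```

For example, `start_unit(*split_target("bar:mqtt.service"))` would request `mqtt.service` on a node named `bar`, provided BlueChi is running and that node is connected. Tracking when the returned job finishes is part of the monitoring examples in the BlueChi documentation.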

Crucial to Resolve Cross-Node Dependencies

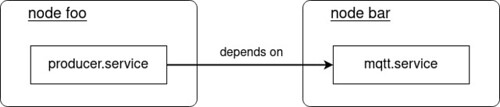

In a distributed system, dependencies between applications running on different nodes is a likely scenario. Consider the following example:

In the system depicted above, producer.service collects sensor data and provides it to other services via an MQTT broker. The two services run on different nodes, but the producer requires the MQTT service to be running. This cross-node dependency can be resolved either by an external state manager or by BlueChi's Proxy Service feature. The latter requires no additional applications and relies only on existing mechanisms. Using this feature, the example above can be implemented with systemd's Wants= in combination with After=:

```ini
# producer.service
[Unit]
Wants=bluechi-proxy@bar_mqtt.service
After=bluechi-proxy@bar_mqtt.service

[Service]
# ...
```

If you are interested in using BlueChi and need to resolve cross-node dependencies, a step-by-step tutorial will get you started. You can also explore the Proxy Service documentation and examples of how to use proxy services, such as restarting a required service if it fails.
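
If several local units need proxy dependencies like the one in producer.service, the lines can also be generated. The sketch below builds that `[Unit]` section as a systemd drop-in for producer.service. It assumes the proxy instance name follows a `<node>_<unit name without .service>` pattern, which is inferred from `bluechi-proxy@bar_mqtt.service`. The helper names and the drop-in file name `50-bluechi-proxy.conf` are hypothetical.

```python
from pathlib import PurePosixPath


def proxy_unit_name(node: str, unit: str) -> str:
    """Name of the BlueChi proxy instance for `unit` on `node`.

    Assumed pattern <node>_<unit name without .service>, inferred from
    bluechi-proxy@bar_mqtt.service.
    """
    suffix = ".service"
    if not unit.endswith(suffix):
        raise ValueError(f"expected a .service unit, got {unit!r}")
    return f"bluechi-proxy@{node}_{unit[:-len(suffix)]}.service"


def render_dropin(node: str, unit: str) -> str:
    """Drop-in content adding Wants= and After= on the proxy for a remote unit."""
    proxy = proxy_unit_name(node, unit)
    return f"[Unit]\nWants={proxy}\nAfter={proxy}\n"


def dropin_path(local_unit: str, name: str = "50-bluechi-proxy.conf") -> PurePosixPath:
    # systemd reads drop-ins from <unit>.d/ directories; the file name here is arbitrary.
    return PurePosixPath("/etc/systemd/system") / f"{local_unit}.d" / name


if __name__ == "__main__":
    print(f"# {dropin_path('producer.service')}")
    print(render_dropin("bar", "mqtt.service"), end="")
```

After writing the file to the drop-in directory, run `systemctl daemon-reload` so that systemd picks up the new dependencies.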

Tooling Around BlueChi Enhances Flexibility

Because BlueChi builds on systemd, it integrates seamlessly with Quadlets, Podman's declarative way of running containers under systemd.
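
The sketch below shows how Quadlets fit in by rendering a minimal `.container` file. Once such a file is placed in `/etc/containers/systemd/` on a node and systemd is reloaded, Quadlet's generator produces a matching `.service` unit. BlueChi can then start and stop that unit like any other service. The MQTT broker image and port are example values, and `render_quadlet` and `generated_service_name` are hypothetical helpers.

```python
def render_quadlet(description: str, image: str, publish_ports=()) -> str:
    """Render a minimal Quadlet .container file as text."""
    lines = [
        "[Unit]",
        f"Description={description}",
        "",
        "[Container]",
        f"Image={image}",
    ]
    # PublishPort= may be repeated, so plain lines are used instead of configparser.
    lines += [f"PublishPort={port}" for port in publish_ports]
    lines += ["", "[Install]", "WantedBy=default.target", ""]
    return "\n".join(lines)


def generated_service_name(quadlet_file: str) -> str:
    """Quadlet generates NAME.service from NAME.container."""
    stem, _, ext = quadlet_file.rpartition(".")
    if ext != "container" or not stem:
        raise ValueError(f"expected NAME.container, got {quadlet_file!r}")
    return f"{stem}.service"


if __name__ == "__main__":
    # Example content for a hypothetical /etc/containers/systemd/mqtt.container
    print(render_quadlet("MQTT broker", "docker.io/library/eclipse-mosquitto:2", ["1883:1883"]), end="")
    print("# systemd unit to manage via BlueChi:", generated_service_name("mqtt.container"))
```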

As mentioned earlier, clients generated from BlueChi's introspection data simplify developing custom applications. The project also provides typed Python bindings that make the API even easier to use.

BlueChi is used within the QM project, which provides an environment that ensures Freedom From Interference (FFI) for applications and containerized tools. A bluechi-agent runs inside the isolated QM environment and is controlled by a bluechi-controller outside QM, which manages the services running inside it.